Linear Systems of Equations

15. Linear Systems of Equations

15.1. Gaussian Elimination

The technique studied here makes solving large systems of equations (e.g. 200 equations in 200 unknowns) practical, and it can be implemented on a computer in a basic programming language. You might be surprised to learn that it is necessary to solve vast systems of linear equations as a matter of routine in many practical scientific applications.

15.1.1. Motivation

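Gaussian elimination is a systematic technique for solving systems of linear equations, which are of the form

\[
\begin{aligned}
a_{1,1}x_1 + a_{1,2}x_2 + \cdots + a_{1,n}x_n &= b_1\\
a_{2,1}x_1 + a_{2,2}x_2 + \cdots + a_{2,n}x_n &= b_2\\
&\;\;\vdots\\
a_{m,1}x_1 + a_{m,2}x_2 + \cdots + a_{m,n}x_n &= b_m
\end{aligned}
\]

where \(a_{i,j}\) and \(b_i\) are constants. Usually there are the same number of equations as unknowns, so \(m = n\). If \(m < n\), the system is underdetermined; if \(m > n\), the system is overdetermined.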

Example

Each equation here defines a line, and we are looking for a point which satisfies both equations, which means that the lines intersect.

From the first equation we obtain \(x_2 = 4 x_1 - 1\), and by substituting this into the second equation, we obtain \(x_1 = \frac{3}{2}\), \(x_2 = 5\). Two equations in two unknowns always have a unique solution, unless the lines are parallel (that is, the left-hand side of one equation is a multiple of the left-hand side of the other).

If they are parallel and distinct, there are no solutions because there are no points that lie on both lines.

If they are parallel and coincident (same line), there are an infinite number of solutions.
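The three cases can be told apart from the coefficients alone. The sketch below is a minimal Python illustration, assuming exact rational arithmetic via the standard-library `fractions` module and a hypothetical function `solve_2x2`. It uses the quantity \(a_{1,1}a_{2,2} - a_{1,2}a_{2,1}\), which is zero exactly when the two lines are parallel, and Cramer's rule (a technique not covered in this section) for the unique case.

```python
from fractions import Fraction


def solve_2x2(a11, a12, b1, a21, a22, b2):
    """Classify a11*x1 + a12*x2 = b1 and a21*x1 + a22*x2 = b2.

    Assumes each equation really defines a line (a11, a12 not both zero,
    and likewise a21, a22). Returns ("unique", (x1, x2)), ("none", None)
    or ("infinite", None).
    """
    a11, a12, b1, a21, a22, b2 = map(Fraction, (a11, a12, b1, a21, a22, b2))
    det = a11 * a22 - a12 * a21
    if det != 0:
        # Lines are not parallel: exactly one intersection point (Cramer's rule).
        x1 = (b1 * a22 - a12 * b2) / det
        x2 = (a11 * b2 - b1 * a21) / det
        return "unique", (x1, x2)
    # det == 0: the left-hand sides are multiples of each other (parallel lines).
    # The lines coincide only if the right-hand sides scale in the same way.
    if a11 * b2 - b1 * a21 == 0 and a12 * b2 - b1 * a22 == 0:
        return "infinite", None
    return "none", None


# Hypothetical examples, one per case.
print(solve_2x2(2, 1, 5, 1, -1, 1))  # unique: x1 = 2, x2 = 1
print(solve_2x2(1, 1, 1, 2, 2, 3))   # parallel and distinct: no solutions
print(solve_2x2(1, 1, 1, 2, 2, 2))   # coincident: infinitely many solutions
```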

15.1.2. A systematic technique for solving systems of equations

We will begin by finding a solution to the following system:

The equations have been labelled \(r_1,r_2,r_3\).

First, we will use \(r_1\) to eliminate \(x_1\) from \(r_2\) and \(r_3\). This gives two equations in two unknowns. Then, we will use \(r_2\) to eliminate \(x_2\) from \(r_3\).

The steps are written out below:

The solution for \(x_3\) can now be read off from \(r_3\); \(x_2\) can be obtained from \(r_2\) using the result for \(x_3\); and \(x_1\) can be obtained from \(r_1\) using the results for \(x_2\) and \(x_3\). This is known as back-substitution.
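Back-substitution is short enough to sketch directly. The Python below is a minimal illustration, assuming the eliminated system is stored as an upper triangular coefficient list `U` with non-zero diagonal entries and a right-hand side list `c`; the name `back_substitute` is hypothetical, and `fractions.Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction


def back_substitute(U, c):
    """Solve U x = c, where U is upper triangular with non-zero diagonal entries."""
    n = len(U)
    x = [Fraction(0)] * n
    # Work upwards: the last equation involves only the last unknown.
    for i in range(n - 1, -1, -1):
        # Subtract the contributions of the unknowns already found ...
        s = Fraction(c[i]) - sum(Fraction(U[i][j]) * x[j] for j in range(i + 1, n))
        # ... then divide by the diagonal coefficient.
        x[i] = s / Fraction(U[i][i])
    return x


# Hypothetical upper triangular system:
#   x1 + x2 + x3 = 6,   2*x2 + x3 = 5,   3*x3 = 3
print(back_substitute([[1, 1, 1], [0, 2, 1], [0, 0, 3]], [6, 5, 3]))  # x1 = 3, x2 = 2, x3 = 1
```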

These manipulations can be conveniently done by looking only at the coefficients, which we collect together in a form called the augmented matrix:

We can see that the algorithm (described in the box below) works by eliminating the coefficients below the leading diagonal, which is highlighted.

Naive Gaussian elimination algorithm (obtaining upper triangular form)

Step 1 Choose initial pivot

We choose the first element from the leading diagonal as the pivot element.

Step 2 Row reduction step

Add multiples of the pivot row to the rows below, to obtain zeros in the pivot column below the leading diagonal.

Step 3 Choose new pivot

The pivot moves to the next element on the leading diagonal.

Repeat

Return to Step 2 and continue until the matrix is in upper triangular form (all zeros below the leading diagonal).

The solutions can then be obtained by back-substitution.
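The forward phase of the boxed algorithm can be sketched in a few lines of Python. This is a minimal illustration, assuming a square system stored as an \(n \times (n+1)\) augmented matrix (a list of rows) and exact arithmetic with `fractions.Fraction`; the name `naive_upper_triangular` is hypothetical. Like the naive algorithm, it never swaps rows, so a zero pivot makes it fail.

```python
from fractions import Fraction


def naive_upper_triangular(aug):
    """Reduce an n x (n+1) augmented matrix to upper triangular form.

    Naive: no row swaps, so a zero pivot raises ZeroDivisionError.
    """
    M = [[Fraction(v) for v in row] for row in aug]  # exact copy; input untouched
    n = len(M)
    for k in range(n):  # Steps 1 and 3: the pivot is the diagonal entry M[k][k]
        pivot = M[k][k]
        for i in range(k + 1, n):  # Step 2: clear column k below the pivot
            factor = M[i][k] / pivot
            # r_i - factor * r_k -> r_i
            M[i] = [a - factor * b for a, b in zip(M[i], M[k])]
    return M


# Hypothetical system: x1 + x2 + x3 = 6, 2x1 - x2 + x3 = 5, x1 + 2x2 - x3 = 6
for row in naive_upper_triangular([[1, 1, 1, 6], [2, -1, 1, 5], [1, 2, -1, 6]]):
    print([str(a) for a in row])
# ['1', '1', '1', '6']
# ['0', '-3', '-1', '-7']
# ['0', '0', '-7/3', '-7/3']
```

Back-substitution on this upper triangular form gives \(x_3 = 1\), \(x_2 = 2\), \(x_1 = 3\). A zero on the diagonal (for example, a first equation with no \(x_1\) term) stops this naive version; a row swap avoids that.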

Exercise 15.1

Write the following system as an augmented matrix, then solve it using the naive Gaussian elimination algorithm.

15.1.3. Generalisation

The naive algorithm introduced here can be generalised to include additional row operations. In general, the acceptable row operations that we can perform are:

multiplication of any row by a non-zero constant (e.g. \(\frac{1}{2}r_1 \rightarrow r_1\))

addition of (a multiple of) any row to any other (e.g. \(r_2 + 2r_1 \rightarrow r_2\))

swapping any two rows (e.g. \(r_1 \leftrightarrow r_2\))

It is often possible to apply these steps creatively to get a result with greater efficiency than using the naive algorithm described above.
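Each of the three operations can be undone by another operation of the same kind, which is why none of them changes the solution set; multiplying a row by zero is excluded because it cannot be undone. A minimal Python sketch follows, assuming an augmented matrix stored as a list of rows; the function names `scale`, `add_multiple` and `swap` are hypothetical.

```python
from fractions import Fraction


def scale(M, i, c):
    """c * r_i -> r_i. c must be non-zero, otherwise the step cannot be undone."""
    if c == 0:
        raise ValueError("multiplying a row by 0 is not a valid row operation")
    M[i] = [Fraction(c) * a for a in M[i]]


def add_multiple(M, target, source, c):
    """r_target + c * r_source -> r_target."""
    M[target] = [a + Fraction(c) * b for a, b in zip(M[target], M[source])]


def swap(M, i, j):
    """r_i <-> r_j."""
    M[i], M[j] = M[j], M[i]


# Python rows are 0-indexed, so r_1 is M[0] and r_2 is M[1].
M = [[2, 4, 6], [-2, -1, 0]]
scale(M, 0, Fraction(1, 2))  # (1/2) r_1 -> r_1 gives r_1 = [1, 2, 3]
add_multiple(M, 1, 0, 2)     # r_2 + 2 r_1 -> r_2 gives r_2 = [0, 3, 6]
swap(M, 0, 1)                # r_1 <-> r_2
```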

It is also not necessary to stop at upper triangular form. Once the last row has been fully simplified, it can be used to obtain zeros above the main diagonal in the last column. Then the second-last row is used to obtain zeros in the second-last column above the main diagonal, and so on, until the only non-zero elements remaining are on the main diagonal. The solutions can then be read off from each row. For instance, continuing with the naive row reduction for the example shown in the previous section, we obtain:

We have obtained row-reduced form and the solutions for \(x_1,x_2,x_3\) can now be read off from the final column.
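The continuation from upper triangular form to diagonal form can be sketched in the same way. The Python below is a minimal illustration, assuming a square system whose augmented matrix is already upper triangular with non-zero diagonal entries; `upper_to_diagonal` is a hypothetical name. It also divides each pivot row by its pivot, so the final column holds the solution directly.

```python
from fractions import Fraction


def upper_to_diagonal(aug):
    """Clear the entries above the diagonal of an upper triangular augmented matrix."""
    M = [[Fraction(v) for v in row] for row in aug]  # exact copy; input untouched
    n = len(M)
    for k in range(n - 1, -1, -1):  # start from the last row and work upwards
        p = M[k][k]
        M[k] = [a / p for a in M[k]]  # make the diagonal entry 1
        for i in range(k):  # zeros above the diagonal in column k
            f = M[i][k]
            M[i] = [a - f * b for a, b in zip(M[i], M[k])]
    return M


# Hypothetical upper triangular system: x1 + x2 + x3 = 6, 2x2 + x3 = 5, 3x3 = 3
R = upper_to_diagonal([[1, 1, 1, 6], [0, 2, 1, 5], [0, 0, 3, 3]])
print([str(row[-1]) for row in R])  # ['3', '2', '1']
```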

15.2. Row Echelon Form

In this section we present an algorithm for solving a linear system of equations. The algorithm works for any linear system, regardless of whether it has zero, one or infinitely many solutions. It works by converting the augmented matrix of the system to reduced row echelon form.

Definition

A matrix is in row echelon form if:

All zero rows are at the bottom.

The first nonzero entry of a row is to the right of the first nonzero entry of the row above.

Below the first nonzero entry of a row, all entries are zero.

\(\star=\) any number

\(\boxed{\star}=\) any non-zero number.

A pivot is the first nonzero entry of a row of a matrix in row echelon form.

A matrix is in reduced row echelon form if it is in row echelon form, and:

Each pivot is equal to 1.

Each pivot is the only nonzero entry in its column.

\(\star=\) any number
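The definitions translate directly into checks on a matrix stored as a list of rows. The following Python is a minimal sketch with hypothetical function names. It relies on the observation that if zero rows come last and each pivot lies strictly to the right of the pivot above it, then every entry below a pivot is automatically zero.

```python
def leading_index(row):
    """Column index of the first non-zero entry of row, or None for a zero row."""
    for j, a in enumerate(row):
        if a != 0:
            return j
    return None


def is_row_echelon(M):
    prev_lead = -1
    seen_zero_row = False
    for row in M:
        lead = leading_index(row)
        if lead is None:
            seen_zero_row = True  # every later row must also be zero
            continue
        if seen_zero_row or lead <= prev_lead:
            return False  # non-zero row below a zero row, or pivot not further right
        prev_lead = lead
    return True


def is_reduced_row_echelon(M):
    if not is_row_echelon(M):
        return False
    for i, row in enumerate(M):
        lead = leading_index(row)
        if lead is None:
            continue
        if row[lead] != 1:
            return False  # each pivot must equal 1
        if any(M[k][lead] != 0 for k in range(len(M)) if k != i):
            return False  # the pivot must be the only non-zero entry in its column
    return True


# Hypothetical examples.
examples = {
    "A": [[1, 2, 0, 3], [0, 0, 1, 4], [0, 0, 0, 0]],
    "B": [[2, 1, 3], [0, 0, 5], [0, 0, 0]],
    "C": [[0, 1], [1, 0]],
}
for name, M in examples.items():
    print(name, is_row_echelon(M), is_reduced_row_echelon(M))
# A True True
# B True False
# C False False
```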

Exercise 15.2

Which of the following matrices are in (a) row echelon form and (b) reduced row echelon form?

15.2.1. Gaussian Elimination for Reduction to Echelon Form

Row Reduction Algorithm (Gaussian Elimination)

Step 1 If necessary, swap the 1st row with a lower one so that the 1st row has a nonzero entry in the leftmost column that is not entirely zero.

Step 2 Multiply the 1st row by a nonzero number so that its first nonzero entry is equal to 1.

Step 3 Add multiples of the 1st row to each lower row so that all entries below this 1 are 0.

Step 4 Repeat Steps 1-3 for row 2, row 3 and so on.

The matrix is now in row echelon form. To convert it to reduced row echelon form:

Step 5 Add multiples of the final pivot row to the rows above it so that all entries above the pivot are 0.

Step 6 Repeat step 5 for each of the other pivot rows, working from bottom to top.
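Steps 1 to 6 can be sketched in Python as follows. This is a minimal illustration, not a reference implementation: it assumes exact arithmetic with `fractions.Fraction`, the name `rref` is hypothetical, and Step 1 simply takes the first row (at or below the current one) with a non-zero entry in the current column, moving to the next column when there is none.

```python
from fractions import Fraction


def rref(A):
    """Return (R, pivot_cols): the reduced row echelon form of A and the
    (0-based) indices of its pivot columns."""
    M = [[Fraction(v) for v in row] for row in A]  # exact copy; A is untouched
    rows = len(M)
    cols = len(M[0]) if M else 0
    pivot_cols = []
    r = 0  # row that will receive the next pivot
    for c in range(cols):
        if r == rows:
            break
        # Step 1: find a row at or below r with a non-zero entry in column c.
        k = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if k is None:
            continue  # no pivot in this column; move one column right
        M[r], M[k] = M[k], M[r]
        # Step 2: scale the pivot row so that the pivot equals 1.
        p = M[r][c]
        M[r] = [a / p for a in M[r]]
        # Step 3: add multiples of the pivot row to clear the entries below.
        for i in range(r + 1, rows):
            f = M[i][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        pivot_cols.append(c)
        r += 1  # Step 4: repeat for the next row
    # Steps 5 and 6: clear the entries above each pivot, bottom pivot first.
    for pr in range(len(pivot_cols) - 1, -1, -1):
        c = pivot_cols[pr]
        for i in range(pr):
            f = M[i][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[pr])]
    return M, pivot_cols


# Hypothetical matrix.
R, pivots = rref([[1, 2, 1, 4], [2, 4, 0, 2]])
for row in R:
    print([str(a) for a in row])
print(pivots)
# ['1', '2', '0', '1']
# ['0', '0', '1', '3']
# [0, 2]
```

Working through the columns in order, rather than always pivoting on the diagonal, is what lets the sketch skip a column of zeros, as happens with column 2 in the example that follows.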

Example: Gaussian Elimination

Use Gaussian elimination to reduce the following matrix to reduced row echelon form:

Solution

First obtain a pivot equal to 1 in the first row, and zeros below it in the same column:

Below the first row, the second column contains only zeros, so the next pivot will be in column 3:

The matrix is now in row echelon form. To achieve reduced row echelon form, we eliminate the entries above the pivots:

Exercise 15.3

Use Gaussian elimination to reduce the following matrix to row echelon form and reduced row echelon form:

15.3. Parametric Solutions to a Linear System

The reduced row echelon form of the augmented matrix allows us to determine the solutions to the system. There are three cases:

1. Every column except the last column is a pivot column. In this case, the system of equations is consistent and there is a single unique solution. For example,

has the solution \(\pmatrix{x_1\\x_2\\x_3} = \pmatrix{5\\2\\-1}\).

2. The last column is a pivot column. In this case the system is inconsistent and there are no solutions. For example,

is equivalent to \(\pmatrix{x_1\\x_2\\0} = \pmatrix{0\\0\\1}\) which is inconsistent. There are no values of \(x_1, x_2\) and \(x_3\) which solve this system.

3. The last column is not a pivot column, and some other column is also not a pivot column. In this case, there are infinitely many solutions. For example,

has infinitely many solutions, since columns 2 and 4 are non-pivot columns. The variables corresponding to the non-pivot columns of the matrix are called free variables. In the next section we will explore how these solutions can be determined.
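Once the augmented matrix is in reduced row echelon form, the three cases depend only on which columns contain pivots. A minimal Python sketch follows, with hypothetical names `pivot_columns` and `classify`; it assumes the matrix is already in reduced row echelon form, with the last column holding the right-hand sides. The first two example matrices are consistent with cases 1 and 2 above; the third is hypothetical.

```python
def pivot_columns(R):
    """Column index of the leading non-zero entry of each non-zero row."""
    cols = []
    for row in R:
        lead = next((j for j, a in enumerate(row) if a != 0), None)
        if lead is not None:
            cols.append(lead)
    return cols


def classify(R):
    """Return 'unique', 'none' or 'infinite' for an augmented matrix R in RREF."""
    last = len(R[0]) - 1  # index of the right-hand-side column
    pivots = pivot_columns(R)
    if last in pivots:
        return "none"  # case 2: some row reads 0 = 1
    if len(pivots) == last:
        return "unique"  # case 1: every coefficient column is a pivot column
    return "infinite"  # case 3: at least one free variable


print(classify([[1, 0, 0, 5], [0, 1, 0, 2], [0, 0, 1, -1]]))  # unique
print(classify([[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1]]))   # none
print(classify([[1, 2, 0, 3, 4], [0, 0, 1, 5, 6]]))           # infinite
```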

15.3.1. General Solution Set

The following system of equations,

is represented by the following augmented matrix,

which through Gaussian elimination may be reduced to the following echelon form,

corresponding to the following pair of equations:

Rearranging slightly,

For any value of \(x_3\), there is exactly one pair of values \(x_1, x_2\) that satisfies the equations. But we are free to choose any value of \(x_3\), so we have found all the solutions: the set of all values \(x_1, x_2, x_3\) where

for any \(t \in \mathbb{R}\).

This is the general solution in parametric form of the system of equations, and \(x_3\) is a free variable.

In a later section we will see how to write this in vector form as follows:

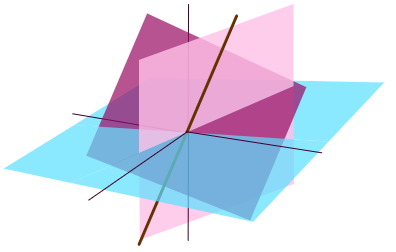

The solution set is a straight line.

Parametric solution to system of linear equations

Suppose we have a linear system of \(m\) equations in \(n\) unknowns. Determine the parametric form of the solution set as follows:

Write the system as an augmented matrix.

Use Gaussian elimination to reduce it to reduced row echelon form.

Write the corresponding system of linear equations.

Move all free variables to the right hand side.
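The last two steps can be sketched in Python for a matrix that is already in reduced row echelon form (the output of the second step). This is a minimal illustration under stated assumptions: the names `parametric_solution` and `format_solution` are hypothetical, every pivot is assumed to equal 1, variables are printed 1-indexed as `x1, x2, ...`, and an inconsistent system raises an error instead of returning a solution.

```python
from fractions import Fraction


def parametric_solution(R):
    """Express each pivot variable of an RREF augmented matrix R in terms of
    the free variables. Indices are 0-based internally.

    Returns (exprs, free), where exprs[p] = (constant, {f: coefficient})
    means x_p = constant + sum(coefficient * x_f).
    """
    n = len(R[0]) - 1  # number of unknowns
    pivot_of_row = {}
    for i, row in enumerate(R):
        lead = next((j for j in range(n) if row[j] != 0), None)
        if lead is None:
            if row[n] != 0:
                raise ValueError("inconsistent system: a row reads 0 = nonzero")
            continue  # a zero row carries no information
        pivot_of_row[i] = lead
    pivots = set(pivot_of_row.values())
    free = [j for j in range(n) if j not in pivots]
    exprs = {}
    for i, p in pivot_of_row.items():
        # Row i reads x_p + sum(R[i][f] * x_f) = R[i][n]; move the free terms across.
        coeffs = {f: -Fraction(R[i][f]) for f in free if R[i][f] != 0}
        exprs[p] = (Fraction(R[i][n]), coeffs)
    return exprs, free


def format_solution(exprs, free):
    lines = []
    for p in sorted(exprs):
        const, coeffs = exprs[p]
        terms = [str(const)]
        terms += [f"{'+' if c > 0 else '-'} {abs(c)}*x{f + 1}" for f, c in coeffs.items()]
        lines.append(f"x{p + 1} = " + " ".join(terms))
    lines += [f"x{f + 1} is free" for f in free]
    return lines


# Hypothetical RREF matrix with pivot variables x1, x3 and free variables x2, x4.
for line in format_solution(*parametric_solution([[1, 2, 0, 3, 4], [0, 0, 1, 5, 6]])):
    print(line)
# x1 = 4 - 2*x2 - 3*x4
# x3 = 6 - 5*x4
# x2 is free
# x4 is free
```

A free variable whose coefficient is zero in every row is still listed as free but appears on no right-hand side.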

Example

Write the solution to the system represented by the following augmented matrix in parametric form.

Solution

The matrix is already in reduced row echelon form. The pivot variables are \(x_1\) and \(x_3\); the free variables are \(x_2\) and \(x_4\). It corresponds to the following equations:

Move the free variables to the right hand side to give the parametric solution:

for any \(x_2, x_4 \in \mathbb{R}\).

This describes a two-dimensional plane in \(\mathbb{R}^4\).

Exercise 15.4

The reduced row echelon form of the matrix for a linear system in four variables \(x_1, x_2, x_3, x_4\) is

Identify the pivot variables and free variables.

Write the solution to the system in parametric form.

15.4. Solutions

Exercise 15.1

Gaussian elimination

Back-substitution

The solution is \(\begin{pmatrix}x\\y\\z\end{pmatrix} = \begin{pmatrix}3\\2\\1\end{pmatrix}\).

Exercise 15.2

Matrices 1, 4, 5 and 6 are in row echelon form.

Matrices 1 and 5 are in reduced row echelon form.

Exercise 15.3

Row echelon form:

Reduced row echelon form:

Exercise 15.4

1. The pivot variables are \(x_1, x_3\); the free variables are \(x_2, x_4\).

2. Write the system of equations:

In parametric form:

What happened to \(x_2\)? It is a free variable, but no other variable depends on it. The general solution is:

for any values of \(x_2\) and \(x_4\).